Voice interfaces are moving toward natural, real-time conversation between users and systems. MinMo, developed by the FunAudioLLM team at Alibaba, is a multimodal large language model (LLM) designed for voice interaction. Its authors report state-of-the-art results in both voice comprehension and generation, while preserving the capabilities of the underlying text LLM.

What Makes MinMo Unique?

Unlike traditional models, which focus on either speech or text, MinMo bridges the two by training on 1.4 million hours of diverse speech data spanning tasks such as speech-to-text (S2T), text-to-speech (TTS), and speech-to-speech (S2S). This extensive dataset enables MinMo to achieve state-of-the-art (SOTA) performance across multiple benchmarks.

Key Features:

- Full-Duplex Interaction: Supports simultaneous two-way communication, with reported latencies of about 100 ms for speech-to-text and about 800 ms for full-duplex interaction.

- Instruction-Following Capabilities: Allows fine control over speech generation, including emotions, dialects, and speaking rates, and can even mimic specific voices.

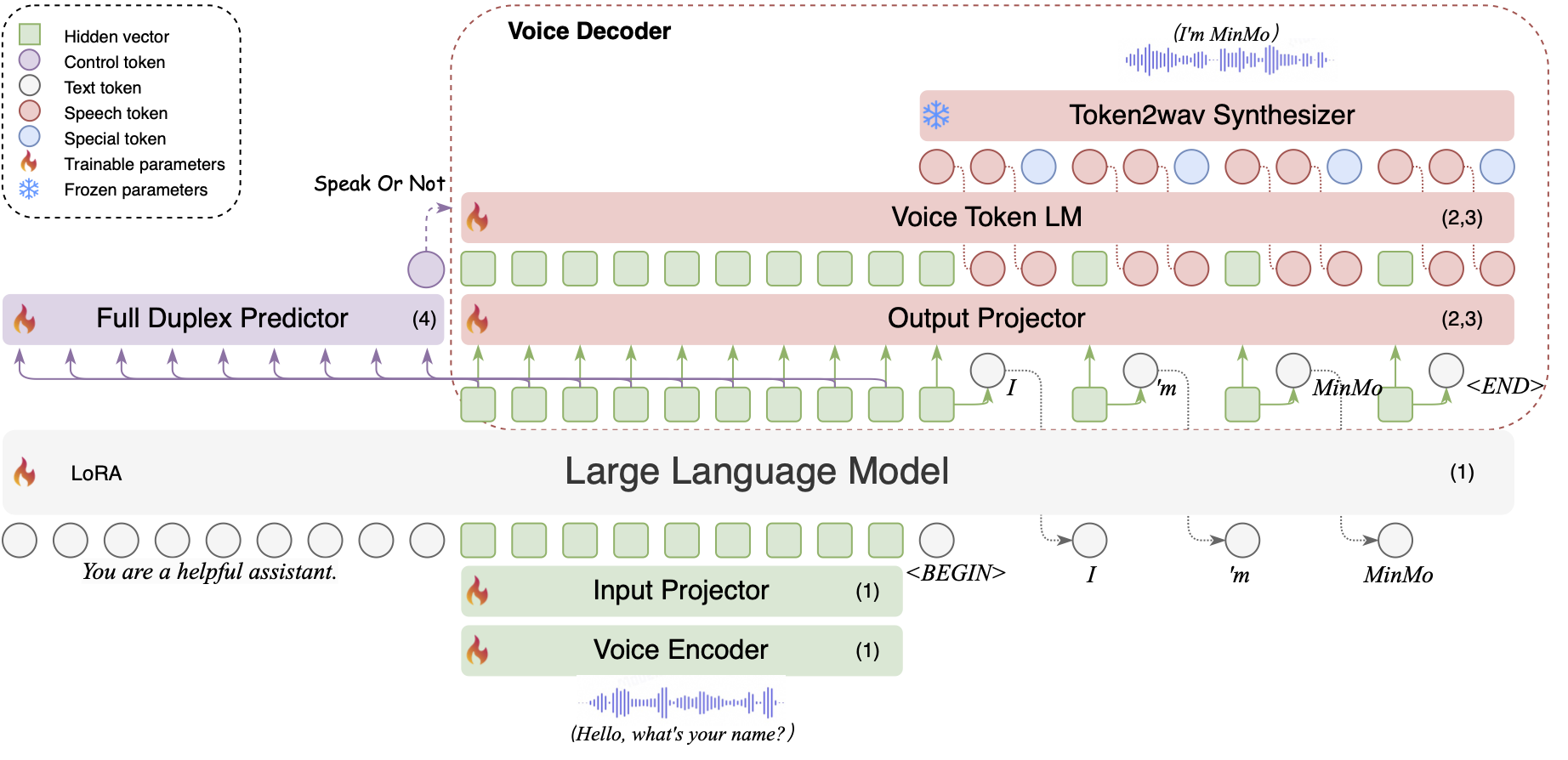

- Advanced Voice Decoder: Incorporates an autoregressive streaming transformer, ensuring efficient, natural voice generation.
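To make the full-duplex feature listed above more concrete, here is a minimal toy sketch of turn-taking with barge-in. It does not reproduce MinMo's mechanism: it swaps in a plain silence-count rule, and every name in it (`ToyDuplexController`, `silence_chunks_to_respond`, the per-chunk voice-activity flag) is a hypothetical chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class State(Enum):
    LISTENING = "listening"
    SPEAKING = "speaking"


@dataclass
class ToyDuplexController:
    """Toy turn-taking rule; NOT MinMo's method. All names are hypothetical."""

    silence_chunks_to_respond: int = 3  # assumed: silent chunks before replying
    state: State = State.LISTENING
    heard_user: bool = False  # has the user spoken since the last reply?
    silent_run: int = 0       # consecutive silent chunks while listening

    def step(self, user_is_speaking: bool) -> State:
        """Consume one audio chunk's voice-activity flag and return the new state."""
        if user_is_speaking:
            # Covers normal listening and barge-in: if the system was
            # speaking, it yields the floor as soon as the user talks.
            self.state = State.LISTENING
            self.heard_user = True
            self.silent_run = 0
        elif self.state is State.LISTENING and self.heard_user:
            # The user has paused; reply once the pause is long enough.
            self.silent_run += 1
            if self.silent_run >= self.silence_chunks_to_respond:
                self.state = State.SPEAKING
                self.heard_user = False
                self.silent_run = 0
        return self.state


if __name__ == "__main__":
    controller = ToyDuplexController(silence_chunks_to_respond=2)
    for flag in [True, True, False, False, False, True]:
        print(flag, controller.step(flag).value)
```

A fixed silence threshold is the simplest possible stand-in. A system aiming for intelligent turn-taking would base the decision on what is being said, not only on how long the pause lasts.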

Addressing the Challenges in Multimodal Models

Native Models vs. Aligned Models

- Native Multimodal Models: These model speech and text jointly in a single framework, but face challenges such as the mismatch in sequence length between speech and text and catastrophic forgetting of text capabilities.

- Aligned Models: These preserve the capabilities of the text LLM, but are often trained on limited data and lack extensive evaluation across diverse tasks.

MinMo overcomes these limitations through a multi-stage training process:

- Speech-to-Text Alignment

- Text-to-Speech Alignment

- Speech-to-Speech Alignment

- Duplex Interaction Alignment

This staged approach is intended to deliver strong speech performance while retaining the robustness of the underlying text LLM.
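The four stages listed above can be pictured as a sequential curriculum in which each stage starts from the checkpoint the previous one produced. The sketch below only illustrates that ordering. The `run_curriculum` helper, the `train_fn` callback, and the `"text-llm-init"` label are hypothetical, and nothing here reflects the actual training code, data, or hyperparameters.

```python
from typing import Callable, List, Sequence

# Stage names are taken from the list above, in the order given there.
STAGES = (
    "speech-to-text alignment",
    "text-to-speech alignment",
    "speech-to-speech alignment",
    "duplex interaction alignment",
)


def run_curriculum(
    stages: Sequence[str],
    train_fn: Callable[[str, str], str],
    initial_checkpoint: str = "text-llm-init",  # hypothetical label
) -> List[str]:
    """Run the stages in order, feeding each stage's checkpoint into the next."""
    checkpoint = initial_checkpoint
    history = []
    for stage in stages:
        # train_fn is a placeholder: it takes a stage name and the incoming
        # checkpoint, and returns an identifier for the resulting checkpoint.
        checkpoint = train_fn(stage, checkpoint)
        history.append(checkpoint)
    return history


if __name__ == "__main__":
    # Stub trainer that only records which stages have been applied.
    def fake_train(stage: str, checkpoint: str) -> str:
        return f"{checkpoint} -> {stage}"

    for ckpt in run_curriculum(STAGES, fake_train):
        print(ckpt)
```

Keeping the stages sequential is what lets later stages build on earlier alignment instead of starting from scratch.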

Real-World Capabilities

MinMo excels in:

- Automatic Speech Recognition (ASR): Outperforms models like Whisper-large-v3 in multilingual ASR tasks.

- Speech-to-Text Translation (S2TT): Demonstrates top-tier BLEU scores across major benchmarks like Fleurs and CoVoST2.

- Speech Emotion Recognition (SER): Achieves nearly perfect accuracy on datasets like CREMA-D and CASIA.

- Full-Duplex Conversations: Provides real-time, human-like dialogues with intelligent turn-taking.
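ASR comparisons like the one above are conventionally scored with word error rate (WER): the word-level edit distance between the reference transcript and the model's output, divided by the number of reference words. The function below is a minimal, self-contained sketch of that metric. It is not the evaluation code used for MinMo, and it skips the text normalization (casing, punctuation) that real benchmarks apply first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words matches an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One deletion ("on") and one substitution ("light" -> "lights")
    # over four reference words.
    print(word_error_rate("turn on the light", "turn the lights"))  # 0.5
```

Lower is better, and WER can exceed 1.0 when the hypothesis contains many inserted words.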

Why MinMo Matters

MinMo's combination of capabilities suits a range of voice-driven applications:

- Customer Support: Empathetic, real-time assistance powered by SER and duplex interaction.

- Language Translation: Accurate and nuanced translations between speech and text in multiple languages.

- Accessibility: Enhances communication tools for users with disabilities.

What’s Next for MinMo?

The FunAudioLLM team plans to open-source MinMo, inviting developers to build on it and extend its applications. Future work includes:

- Extending Language Support: To include low-resource languages.

- Optimizing Duplex Latency: Making interactions even faster and smoother.

- Expanding Dataset Diversity: To train on more naturalistic and diverse speech scenarios.
