The Architectural Superiority of Java for AI Apps

When discussing Java for AI apps, we cannot ignore the architectural advantages of the Java Virtual Machine (JVM). Unlike purely interpreted runtimes such as CPython, the JVM uses Just-In-Time (JIT) compilation. As an application runs, the JVM profiles its most frequently executed code paths and compiles them into native machine code tuned for the specific CPU it is running on. For long-running neural network services and data processing pipelines, this translates into significant performance gains once the application has warmed up.
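To make the warm-up effect concrete, here is a minimal sketch that times the same dot-product loop over several rounds. The class name `JitWarmupDemo` and the vector and repetition sizes are illustrative assumptions. On HotSpot, early rounds usually run interpreted or lightly compiled code and later rounds run fully optimized code, so per-round times typically fall. Exact numbers vary by machine and JVM.

```java
// Hypothetical micro-demo: times the same hot loop over several rounds.
// On HotSpot, later rounds usually run C2-optimized code and get faster.
// Timings are illustrative only; use a harness such as JMH for real measurements.
final class JitWarmupDemo {

    // Dot product of two vectors: the kind of tight numeric loop the JIT compiles.
    static double dot(float[] a, float[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            sum += (double) a[i] * b[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        int dim = 4096;
        float[] a = new float[dim];
        float[] b = new float[dim];
        for (int i = 0; i < dim; i++) {
            a[i] = i % 7;
            b[i] = 1.0f;
        }
        for (int round = 1; round <= 5; round++) {
            long start = System.nanoTime();
            double acc = 0.0; // accumulate results so the loop cannot be optimized away
            for (int rep = 0; rep < 2_000; rep++) {
                acc += dot(a, b);
            }
            long micros = (System.nanoTime() - start) / 1_000;
            System.out.printf("round %d: %d us (checksum %.1f)%n", round, micros, acc);
        }
    }
}
```

Running it with `java -XX:+PrintCompilation JitWarmupDemo.java` makes HotSpot log methods such as `dot` as it compiles them.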

Furthermore, the JVM’s mature memory management and concurrency model are crucial for applications that interact with Large Language Models (LLMs). While Python services can hit a “memory wall” under heavy inference load, Java applications can use virtual threads, delivered by Project Loom and final since Java 21, to handle the massive concurrency required by LLM-powered chatbots and real-time agentic workflows. Because each virtual thread is cheap, a service can keep thousands of slow, high-token-count requests in flight without exhausting a pool of operating-system threads, so it stays responsive. This architectural efficiency makes Java the definitive choice for the next generation of generative AI infrastructure.
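The concurrency point can be illustrated with a minimal sketch, assuming Java 21 or later. Each simulated LLM request runs on its own virtual thread via `Executors.newVirtualThreadPerTaskExecutor()`. The `callModel` method is a hypothetical stand-in that only sleeps, and no real model API is called. Because a blocked virtual thread releases its carrier thread, 10,000 concurrent 200 ms waits typically finish in roughly the time of one request plus scheduling overhead, without 10,000 platform threads.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class VirtualThreadFanOut {

    // Hypothetical stand-in for a blocking LLM call: sleeps to simulate network and inference latency.
    static String callModel(int requestId) throws InterruptedException {
        Thread.sleep(Duration.ofMillis(200));
        return "response-" + requestId;
    }

    // Submits every request to its own virtual thread and collects results in submission order.
    static List<String> handleAll(int requests) throws InterruptedException, ExecutionException {
        // try-with-resources: close() waits for all submitted tasks to finish
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            List<Future<String>> futures = new ArrayList<>(requests);
            for (int i = 0; i < requests; i++) {
                final int id = i;
                Callable<String> task = () -> callModel(id);
                futures.add(executor.submit(task));
            }
            List<String> results = new ArrayList<>(requests);
            for (Future<String> future : futures) {
                results.add(future.get()); // blocks the caller until this result is ready
            }
            return results;
        }
    }

    public static void main(String[] args) throws Exception {
        long start = System.nanoTime();
        List<String> results = handleAll(10_000);
        long millis = (System.nanoTime() - start) / 1_000_000;
        System.out.println(results.size() + " simulated requests completed in " + millis + " ms");
    }
}
```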

Multithreading and Parallelism in Java for AI Apps

Modern AI requires immense parallel processing power. Java for AI apps can draw on the platform’s mature multithreading support and the Fork/Join framework (`java.util.concurrent.ForkJoinPool`), which splits a large task into smaller subtasks and spreads them across CPU cores using work stealing. This is a game-changer for Java for AI apps that need to process real-time data streams or handle thousands of concurrent user queries in an enterprise chatbot environment.
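Here is a minimal Fork/Join sketch. The task, which sums squared vector components as in an L2 norm, and the `THRESHOLD` of 10,000 elements are illustrative choices rather than tuned values. Each task halves its range until the range is small enough to compute sequentially. It then forks one half, computes the other half itself, and joins the two results.

```java
import java.util.Arrays;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Sums the squares of a vector's components by recursively splitting the range
// across Fork/Join worker threads (e.g. one step of computing an L2 norm).
final class SumOfSquaresTask extends RecursiveTask<Double> {
    private static final int THRESHOLD = 10_000; // below this size, compute sequentially
    private final float[] data;
    private final int from;
    private final int to;

    SumOfSquaresTask(float[] data, int from, int to) {
        this.data = data;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Double compute() {
        if (to - from <= THRESHOLD) {
            double sum = 0.0;
            for (int i = from; i < to; i++) {
                sum += (double) data[i] * data[i];
            }
            return sum;
        }
        int mid = (from + to) >>> 1;
        SumOfSquaresTask left = new SumOfSquaresTask(data, from, mid);
        SumOfSquaresTask right = new SumOfSquaresTask(data, mid, to);
        left.fork();                       // schedule the left half; idle workers may steal it
        double rightSum = right.compute(); // compute the right half in the current thread
        return left.join() + rightSum;     // wait for the left half and combine
    }

    static double sumOfSquares(float[] data) {
        return ForkJoinPool.commonPool().invoke(new SumOfSquaresTask(data, 0, data.length));
    }

    public static void main(String[] args) {
        float[] v = new float[5_000_000];
        Arrays.fill(v, 0.5f);
        System.out.println("L2 norm: " + Math.sqrt(sumOfSquares(v)));
    }
}
```

Computing one half in the current thread instead of forking both keeps that thread busy and halves the number of queued tasks. This is a common Fork/Join idiom.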

Security: The Enterprise Mandate for Java for AI Apps

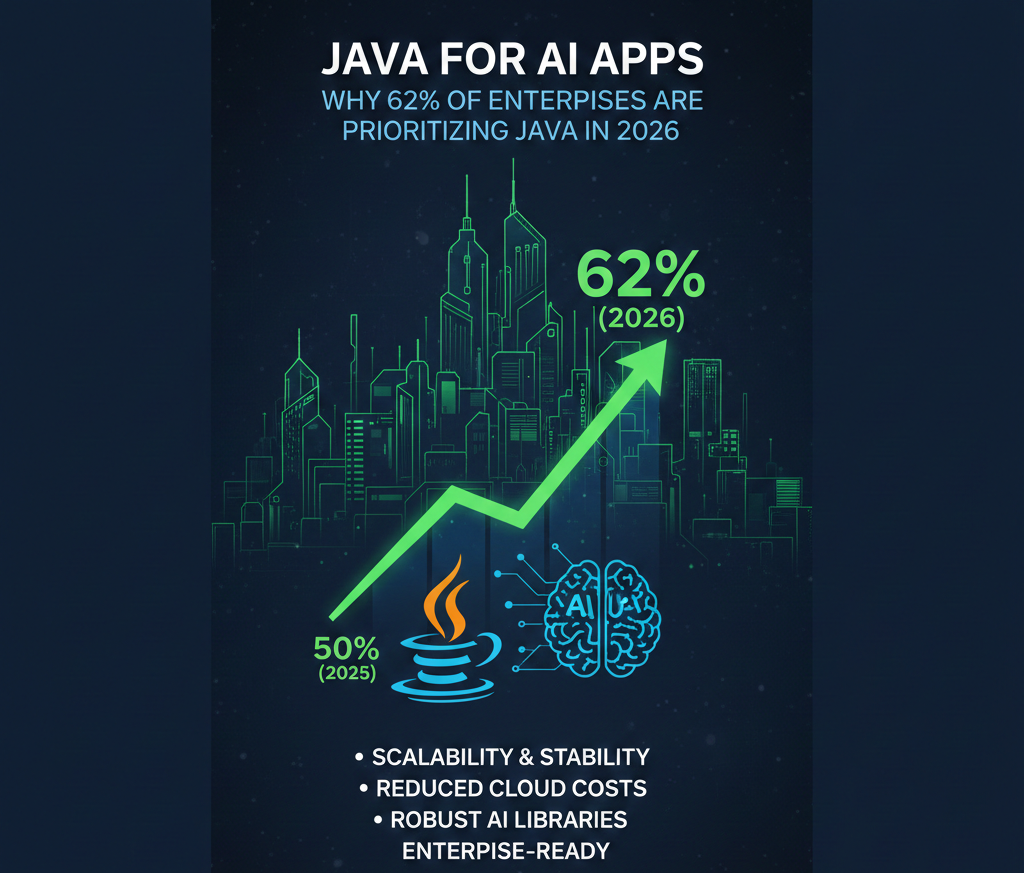

Security is often the “missing piece” in AI conversations, but not for Java for AI apps. As 62% of enterprises move their AI initiatives toward production, the inherent security features of the Java platform become a primary selling point. Enterprise Java AI solutions benefit from:

- Strong Typing: Static type checking catches whole classes of errors at compile time, reducing runtime failures and vulnerabilities in complex Java for AI apps.

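- Sandboxing: The ability to run untrusted AI model code in a restricted environment. The legacy Security Manager was deprecated for removal in Java 17 (JEP 411), so modern deployments typically achieve this isolation with containers or separate processes.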

- Automatic Memory Management: Garbage collection and bounds-checked array access prevent the buffer overflows and use-after-free bugs that can plague lower-level languages such as C and C++.
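The typing and memory-safety points can be sketched in a few lines. The `EmbeddingRequest` record and its fixed dimension of 4 are hypothetical. Its compact constructor rejects malformed input before it reaches a model. An out-of-range array read throws `ArrayIndexOutOfBoundsException` instead of silently reading adjacent memory, as an unchecked C buffer access could.

```java
import java.util.Arrays;

final class MemorySafetyDemo {

    // Every Java array access is bounds-checked by the JVM.
    static float readAt(float[] buffer, int index) {
        return buffer[index];
    }

    public static void main(String[] args) {
        EmbeddingRequest ok = new EmbeddingRequest("demo-model", new float[] {0.1f, 0.2f, 0.3f, 0.4f});
        System.out.println("accepted request for " + ok.modelId());

        try {
            new EmbeddingRequest("demo-model", new float[] {0.1f, 0.2f}); // wrong dimension
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }

        try {
            readAt(ok.vector(), 10); // index past the end of a 4-element array
        } catch (ArrayIndexOutOfBoundsException e) {
            System.out.println("blocked out-of-bounds read: " + e.getMessage());
        }
    }
}

// Hypothetical typed request: the compiler only accepts a String model id and a float[] vector,
// and the compact constructor validates the values before they can reach a model.
record EmbeddingRequest(String modelId, float[] vector) {
    static final int DIMENSION = 4; // assumed model input size for this sketch

    EmbeddingRequest {
        if (modelId == null || modelId.isBlank()) {
            throw new IllegalArgumentException("modelId is required");
        }
        if (vector == null || vector.length != DIMENSION) {
            throw new IllegalArgumentException("expected a vector of " + DIMENSION + " floats");
        }
        vector = Arrays.copyOf(vector, vector.length); // defensive copy of the caller's array
    }
}
```

These guarantees cover Java code only. Native libraries reached through JNI, such as inference engines, are not bounds-checked by the JVM.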

When AI workloads run on Java, Chief Information Security Officers (CISOs) can sleep better knowing their AI infrastructure follows the same rigorous security protocols as their banking and healthcare backend systems.

Strategies for Migrating to Java for AI Apps

Many organizations ask: “How do we transition from our Python prototypes to scalable AI workloads in Java?” The 2026 Azul report suggests that a hybrid approach is the most common path to success.

1. The Wrapper Method

Most enterprises start by wrapping their existing models in a Java application layer, for example by calling a Python model server over HTTP or gRPC. The JVM then handles the heavy lifting of data orchestration and user management, while the model itself remains in its original format.
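Here is a minimal sketch of the wrapper method. The Java side calls a Python model server over HTTP using the JDK's built-in `java.net.http.HttpClient`. The endpoint `http://localhost:8000/predict`, the JSON payload shape, and the class name `PythonModelClient` are hypothetical placeholders for whatever the existing Python service exposes. Running `main` assumes such a service is listening.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Java-side wrapper around a model that stays in Python and is reached over HTTP.
// The endpoint URL and the JSON payload shape are hypothetical placeholders.
final class PythonModelClient {
    private final HttpClient http = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(2))
            .build();
    private final URI endpoint;

    PythonModelClient(URI endpoint) {
        this.endpoint = endpoint;
    }

    // Builds the POST request on its own so it can be inspected without a running server.
    HttpRequest buildRequest(String jsonPayload) {
        return HttpRequest.newBuilder(endpoint)
                .timeout(Duration.ofSeconds(30))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonPayload))
                .build();
    }

    // Sends the payload and returns the Python service's JSON response body.
    String predict(String jsonPayload) throws IOException, InterruptedException {
        HttpResponse<String> response =
                http.send(buildRequest(jsonPayload), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("model service returned HTTP " + response.statusCode());
        }
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        PythonModelClient client = new PythonModelClient(URI.create("http://localhost:8000/predict"));
        System.out.println(client.predict("{\"text\": \"hello\"}"));
    }
}
```

Keeping `buildRequest` separate from `predict` makes the request shape testable without a live model server.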

2. Native Porting with DJL

For maximum performance, teams are increasingly running models directly from Java with the Deep Java Library (DJL). DJL loads models through native engines such as PyTorch, TensorFlow, and ONNX Runtime. This removes the Python interpreter from the serving path and lets Java for AI apps reach hardware accelerators such as GPUs through those engines.

3. Microservices Architecture

By deploying AI functionality as independent microservices within a Java ecosystem, companies can scale specific AI components without scaling the entire application. This modularity is a hallmark of successful Java for AI apps in 2026.
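A single AI capability can be exposed as its own microservice using nothing beyond the JDK. This sketch uses the built-in `com.sun.net.httpserver.HttpServer` (module `jdk.httpserver`) and assumes Java 21 for virtual threads. The `/score` path, port 8080, and the length-based `score` function are hypothetical placeholders for a real model. Because the service owns a single endpoint, it can be replicated on its own when scoring traffic grows.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executors;

// One AI capability exposed as its own small service, deployable and scalable
// independently of the main application. The /score path and the length-based
// "model" are hypothetical placeholders.
final class ScoringService {

    // Placeholder for real inference: scores text by its length, capped at 1.0.
    static double score(String text) {
        return Math.min(1.0, text.length() / 100.0);
    }

    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/score", exchange -> {
            String input = new String(exchange.getRequestBody().readAllBytes(), StandardCharsets.UTF_8);
            byte[] body = ("{\"score\": " + score(input) + "}").getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        // Handle each request on its own virtual thread (Java 21+).
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = start(8080);
        System.out.println("scoring service listening on port " + server.getAddress().getPort());
    }
}
```

For example, once the service is running, `curl -d 'hello' http://localhost:8080/score` would return `{"score": 0.05}`.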

The Human Element: Empowering Developers with Java for AI Apps

One of the most surprising trends in the 2026 survey is how Java for AI apps are actually making developers more productive. With 30% of code now generated by AI tools, Java’s verbosity, once considered a drawback, has become an advantage. Its explicit types and conventional structure give AI coding assistants clear patterns to follow, so they are exceptionally good at writing Java boilerplate, leaving human developers free to focus on high-level AI logic and integration.

Furthermore, the “Write Once, Run Anywhere” (WORA) philosophy applies well to Java-based machine learning applications. A developer can build a model-serving application on a laptop and deploy it to a large Kubernetes cluster in the cloud, largely without worrying about library version conflicts or OS-specific bugs.